How we performed a live event stream migration in production

“You must unlearn what you have learned.” – Jedi Master Yoda, The Empire Strikes Back

In modern cloud computing, things don’t always start off as the most scalable or stable solution. Mistakes are made, and challenges are faced. Through them, we evolve, and what we build becomes more resilient.

This post is about one such experience: a challenge we faced collectively as an engineering organization and from which we emerged with a more scalable, efficient, and resilient system. Specifically, it is about how we evolved the event streaming pipelines that serve one of Freshworks’ marquee developer platform features, Serverless Apps.

Serverless apps and product events

When you perform an action on any Freshworks product (through either the UI or the REST APIs), the product generates ‘product events,’ each of which can be treated as a copy of the resource that the action created, updated, or deleted. The Freshworks Developer Platform has a solution called ‘serverless apps’ that can listen for and act upon these events.

We previously covered the story behind our Serverless Apps here.

How events work

What powers our event pipeline is the widely known open-source distributed event streaming platform Apache Kafka. Kafka’s distributed nature and message replication provide excellent resiliency along with scalability. Messages are retained for a configurable period (even after they have been read), which provides an extra layer of durability. There are many popular articles about how Kafka works, but in layperson’s terms, there are three parts to it.

The producer ‘produces’ a message (in this case, the ‘event’ payload) and pushes it to a Kafka ‘topic,’ where the message stays available to be ‘consumed’ (or polled) by a consumer. Different consumers can consume the same message from a topic, but a given consumer usually does not poll the same message again and again, because Kafka tracks how far (up to which offset) each consumer group has read. Re-reading messages is possible, but it is out of scope for this post.
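To make the offset idea concrete, here is a toy, single-partition model written in Python. It is not Kafka’s API: real Kafka splits topics into partitions and stores committed offsets on the brokers, and the consumer group names below are hypothetical. It only shows why two consumer groups can both read a message while one group does not read it twice: the log keeps its messages, and each group advances only its own offset.

```python
from collections import defaultdict


class ToyTopic:
    """An append-only log with one read offset per consumer group (a toy, not Kafka)."""

    def __init__(self) -> None:
        self.log: list[str] = []                         # messages are kept, not deleted on read
        self.offsets: dict[str, int] = defaultdict(int)  # next offset each group will read

    def produce(self, message: str) -> None:
        self.log.append(message)

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        start = self.offsets[group]
        batch = self.log[start:start + max_records]
        self.offsets[group] = start + len(batch)  # "commit" the group's new position
        return batch


topic = ToyTopic()
topic.produce("ticket_create #1")
topic.produce("ticket_update #1")

print(topic.poll("developer-platform"))  # ['ticket_create #1', 'ticket_update #1']
print(topic.poll("analytics"))           # the same two messages: groups read independently
print(topic.poll("developer-platform"))  # []: this group has already read them
```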

Internally, within the Freshworks ecosystem, the product (e.g., Freshdesk, Freshservice) acts as the ‘producer,’ and we, the Developer Platform, are one of the consumers of a central Kafka cluster shared between us. The platform then uses these events to trigger the serverless apps. This setup works well.

However, this was not always the case.

Before arriving at the Kafka solution, we experimented with AWS SQS and ETL (Extract, Transform, Load) transformations to share events between the product and the platform. As it turned out, our biggest and oldest product, Freshdesk, was the most affected by the older solution.

Uncovering the problem

When the Developer Platform was in its infancy, and serverless apps were just scribbles on the drawing board, we were looking for ways to hook into the data generated by Freshdesk (later, we would come to call these product events). At the time, we developed a way to extract database change events from the Freshdesk database, filter them, enrich them, and send them to an SQS queue, which the platform polled before invoking the corresponding apps. This system was known as the ETL Poller. It worked for a while but then ran into multiple scalability issues. Eventually, most of the products moved to the much more scalable Kafka paradigm.
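The extract, filter, enrich, and enqueue stages described above can be sketched as a chain of Python generators. This is a hypothetical illustration, not the ETL Poller’s code: the change-record layout, the `TRACKED_TABLES` mapping, and the event-type naming are assumptions, and an in-process `queue.Queue` stands in for the SQS queue.

```python
import json
from queue import Queue
from typing import Iterable, Iterator

# Hypothetical mapping from tracked tables to the resource name used in event types.
TRACKED_TABLES = {"tickets": "ticket", "notes": "note", "contacts": "contact"}


def extract(change_log: Iterable[dict]) -> Iterator[dict]:
    """Extract: yield raw database change records (stand-in for reading the DB change stream)."""
    yield from change_log


def keep_tracked(changes: Iterable[dict]) -> Iterator[dict]:
    """Filter: keep only changes to tables that serverless apps can subscribe to."""
    return (c for c in changes if c["table"] in TRACKED_TABLES)


def enrich(changes: Iterable[dict], product: str) -> Iterator[dict]:
    """Enrich: wrap each change in an event envelope with product and account context."""
    for c in changes:
        yield {
            "type": f'{TRACKED_TABLES[c["table"]]}_{c["op"]}',  # e.g. "ticket_create"
            "product": product,
            "account": c["account_id"],
            "model_properties": c["row"],
        }


def run_poller(change_log: Iterable[dict], out_queue: Queue, product: str = "freshdesk") -> None:
    """Load: serialize each event and enqueue it (the Queue stands in for SQS)."""
    for event in enrich(keep_tracked(extract(change_log)), product):
        out_queue.put(json.dumps(event))


q: Queue = Queue()
run_poller([
    {"table": "tickets", "op": "create", "account_id": 123456, "row": {"id": 1}},
    {"table": "audit_logs", "op": "create", "account_id": 123456, "row": {"id": 9}},
], q)
print(q.qsize())  # 1: the audit_logs change was filtered out
```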

Everyone involved with Serverless Apps for Freshdesk wanted to move to the new paradigm: the product, the platform, and the people behind them. But due to time and resource constraints, an interim (somewhat MacGyvered) solution was created in which the ETL Poller pushed to a Kafka topic and the platform relied solely on consuming that topic. While this reduced the platform’s direct dependency on the poller, Freshdesk’s scalability remained severely limited by its own dependency on the poller. We called this the V1-Flow.

Over time, Freshdesk was able to move to the new paradigm (the V2-Flow), in which the product pushed events directly to the Kafka cluster. However, this created a new challenge for the platform: we had to switch from consuming the V1-Flow to consuming the V2-Flow in real time without causing any issues for downstream apps. For context, the platform consumes almost 100K events per minute in a single data center alone, so a problem lasting even 10 minutes would affect about a million events. Clearly, we had to be extremely careful.

The Challenges

We had to overcome several significant challenges as we prepared a solution for this live switchover in production.

Challenge #1

The Developer Platform guarantees exactly-once execution of a serverless app per subscribed event generated by the product. This guarantee had to be honored during the transition from the V1-Flow to the V2-Flow. Freshdesk would be sending the same event to both flows (at almost the same moment), yet only one of them should end up executing the app.

Challenge #2

The ETL Poller performed several transformations on the event payload generated by Freshdesk, and the platform essentially piped the resulting payload to the apps (and other internal services) that needed it. The most significant transformation was data truncation for enormous payloads (> 512 KB). This allowed downstream consumers to process the payloads through various AWS services, most importantly AWS Lambda, whose asynchronous invocation has a hard input size limit of 128 KB. The V2-Flow had no such accommodations.

So if the platform tried to directly pipe a V2 event payload (as we did with the V1 payload), the downstream apps and services would not be able to process the payload due to the inherent limits of said services.
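A pre-flight size check makes Challenge #2 concrete. The sketch below measures a payload as compact UTF-8 JSON (one plausible wire format, which is an assumption) and compares it with the 128 KB asynchronous Lambda limit quoted above; check the current AWS Lambda quotas before relying on that figure. The helper names are hypothetical.

```python
import json

# Asynchronous Lambda input limit as quoted in the text; confirm against current AWS quotas.
ASYNC_INPUT_LIMIT_BYTES = 128 * 1024


def payload_size(payload: dict) -> int:
    """Bytes needed to send the payload as compact, UTF-8 encoded JSON."""
    return len(json.dumps(payload, separators=(",", ":")).encode("utf-8"))


def fits_async_limit(payload: dict, limit: int = ASYNC_INPUT_LIMIT_BYTES) -> bool:
    """True if the payload is within the limit without any truncation."""
    return payload_size(payload) <= limit


small = {"msg_id": "123abcde4", "model_properties": {"description": "Printer is on fire"}}
large = {"msg_id": "123abcde4", "model_properties": {"description": "x" * 200_000}}
print(fits_async_limit(small), fits_async_limit(large))  # True False
```

A V2 payload that fails this check is exactly the kind that had to go through the truncation logic before reaching an app.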

Challenge #3

These transformations were not uniformly implemented in the V2-Flow, because it evolved from a completely different code base in Freshdesk, and many other consumers had already shaped the V2-Flow payload format before the Developer Platform started considering consuming it.

This again meant that if the platform piped a V2 event payload directly to a downstream app, the app would not be able to recognize the payload unless we mimicked the transformations the ETL Poller was performing.

As we learned from our early tests, this parity-seeking exercise was a moving target: we kept finding new attributes in the V2 payload that were out of sync with the V1 payload in new ways.
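Part of this parity-seeking work can be automated with a structural diff. The Python sketch below (a hypothetical helper, not the tooling used in the project) walks a V1 and a V2 payload side by side and reports keys missing from either side and values whose types differ; it does not descend into lists. The field names in the example are made up.

```python
from typing import Any


def diff_shape(v1: Any, v2: Any, path: str = "$") -> list[str]:
    """List structural differences: keys missing on either side and type mismatches."""
    if isinstance(v1, dict) and isinstance(v2, dict):
        issues: list[str] = []
        for key in sorted(v1.keys() | v2.keys()):
            child = f"{path}.{key}"
            if key not in v2:
                issues.append(f"missing in V2: {child}")
            elif key not in v1:
                issues.append(f"extra in V2: {child}")
            else:
                issues.extend(diff_shape(v1[key], v2[key], child))
        return issues
    if type(v1) is not type(v2):
        return [f"type mismatch at {path}: {type(v1).__name__} vs {type(v2).__name__}"]
    return []  # same type: values may differ, but the shape matches


v1_payload = {"msg_id": "123abcde4", "model_properties": {"status": 2, "priority_name": "Low"}}
v2_payload = {"msg_id": "123abcde4", "model_properties": {"status": "2", "tags": []}}
for issue in diff_shape(v1_payload, v2_payload):
    print(issue)
# missing in V2: $.model_properties.priority_name
# type mismatch at $.model_properties.status: int vs str
# extra in V2: $.model_properties.tags
```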

Challenge #4

Given the unpredictability introduced by Challenge #3, we wanted to be able to roll the V2-Flow out to customers one by one while monitoring the reliability and payload schema accuracy of event delivery for each customer. If we found any issues with our downstream serverless apps, we wanted a way to roll that particular customer back to the V1-Flow. To add to the complexity, we wanted to do this across five event types: ticket_create, ticket_update, note_create, contact_create, and contact_update.

Challenge #5

To make matters even harder, one of our biggest customers asked us to accommodate massive scaling requirements for the upcoming IPL season, within a short timeframe. We knew we could not meet this demand with the V1-Flow, so our window for experimentation suddenly became very time-boxed.

The Plan

To understand what we ended up doing, one needs to understand what a typical event payload looks like.

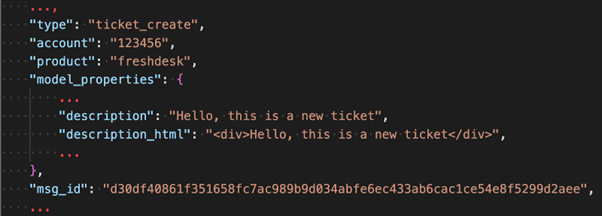

Freshdesk would push the same payload to both streams (V1 and V2 Flows). The V1-Flow would enrich the model_properties key with a few more specific data fields, and the V2-Flow would receive all of the data directly from Freshdesk (remember we try to pipe this data almost unchanged to the app, see Challenge #3).

What is essential is that every payload carries the type, account, product, and msg_id properties. The msg_id is a unique identifier corresponding to a single action on the product side, so for each action, two payloads with the same msg_id are pushed, one to each flow.
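Since every consumer decision hinges on these four properties, it helps to parse them defensively before doing anything else. The dataclass below is a sketch that assumes a flat envelope with `type`, `account`, `product`, `msg_id`, and `model_properties` at the top level; the exact layout in the image above may differ, and `ProductEvent` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Any

REQUIRED_KEYS = ("type", "account", "product", "msg_id")


@dataclass(frozen=True)
class ProductEvent:
    type: str     # e.g. "ticket_create"
    account: str  # account ID, normalized to a string
    product: str  # e.g. "freshdesk"
    msg_id: str   # one per product-side action; shared by the V1 and V2 copies
    model_properties: dict[str, Any] = field(default_factory=dict)

    @classmethod
    def from_dict(cls, raw: dict[str, Any]) -> "ProductEvent":
        """Reject payloads lacking the identifying keys instead of failing later."""
        missing = [k for k in REQUIRED_KEYS if raw.get(k) in (None, "")]
        if missing:
            raise ValueError(f"event is missing required keys: {missing}")
        return cls(*(str(raw[k]) for k in REQUIRED_KEYS),
                   model_properties=dict(raw.get("model_properties") or {}))


event = ProductEvent.from_dict({
    "type": "ticket_create", "account": 123456, "product": "freshdesk",
    "msg_id": "123abcde4", "model_properties": {"id": 1},
})
print(event.account, event.msg_id)  # 123456 123abcde4
```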

Relying on the uniqueness of the msg_id, and aiming to honor the Developer Platform’s guarantee of exactly-once app execution per product-side action, we needed a mechanism that executed an app only once per msg_id (keep Challenge #1 in mind).

The V1-Flow also had logic that truncated the description and description_html fields whenever either exceeded ten thousand characters. The V2-Flow pushed all of this data to the platform through Kafka without truncation (recall Challenge #2).
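The truncation rule can be sketched as a small pure function. It assumes a plain cut at 10,000 characters with no ellipsis or marker (the V1-Flow’s exact behavior isn’t described here), and `truncate_fields` is a hypothetical name. Cutting `description_html` this way can leave unclosed tags, which downstream apps would have to tolerate.

```python
from typing import Any

MAX_CHARS = 10_000  # threshold described in the text
TRUNCATED_FIELDS = ("description", "description_html")


def truncate_fields(model_properties: dict[str, Any], limit: int = MAX_CHARS) -> dict[str, Any]:
    """Return a shallow copy in which each over-long text field is cut to `limit` characters."""
    out = dict(model_properties)  # leave the consumed payload untouched
    for name in TRUNCATED_FIELDS:
        value = out.get(name)
        if isinstance(value, str) and len(value) > limit:
            out[name] = value[:limit]
    return out


props = {"description": "a" * 12_000, "description_html": "<p>short</p>", "subject": "Help"}
trimmed = truncate_fields(props)
print(len(trimmed["description"]), trimmed["description_html"])  # 10000 <p>short</p>
```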

Remember that we wanted to perform this V1-to-V2 migration seamlessly, without any adverse or even visible effects on our customers’ Freshdesk support portals. We wanted to do this carefully for every customer and every event type we were dealing with (i.e., five event types per customer account).

We realized we needed a low-latency store for fine-grained configuration for thousands of customers that could be flipped easily in real time. Additionally, we needed to remember each msg_id we consumed for some time to guarantee that each event was executed exactly once. We selected Redis for both purposes, owing to its low latency and because it was already conveniently integrated with our systems.

We ended up making two primary changes to our Kafka consumer:

1. To address Challenge #1, we tracked each consumed msg_id in Redis with a time-to-live (TTL) of 2 hours. This guaranteed that we didn’t process the same event from both streams.

Example: Let’s say this pair of events is processed one after the other (within the 2-hour TTL):

(from V2-Flow) consumed msg_id: 123abcde4

-> check Redis for existence of 123abcde4

-> does not exist, message to be processed further

-> set key 123abcde4 in Redis with a dummy boolean value (true)

-> message processed further

(from V1-Flow) consumed msg_id: 123abcde4

-> check Redis for existence of 123abcde4

-> key exists, payload not processed

2. For Challenge #4, we stored a key-value pair (key: string, value: boolean) per account and event name to track which of the two streams was feeding events to that account. True represented the V2-Flow, while false represented the V1-Flow.

Example: freshdesk#123456#ticket_create -> false

freshdesk#123456#ticket_update -> true

If the key did not exist in Redis, we defaulted to the V1-Flow.

The above keys in Redis would mean that the serverless apps in account 123456 get the ticket_create event from the V1-Flow, while the ticket_update event is fetched from the V2-Flow.

3. To address Challenge #2 and Challenge #3, we ported the ETL Poller’s truncation and other transformation logic to the V2-Flow payloads, while trying to preserve as much raw data as possible.
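The two Redis checks above can be sketched together in Python. Everything here is hypothetical: `FakeRedis` is an in-memory stand-in so the example runs without a server, the `dedup#` key prefix and the "true"/"false" string values are assumptions, and running the flow check before the dedup check is an assumed ordering. One deliberate difference from the walkthrough: instead of checking for a key and then setting it, the sketch claims the msg_id in a single call, mirroring Redis’s atomic `SET key value NX EX 7200` (in redis-py, `set(name, value, nx=True, ex=7200)`), so two copies arriving at almost the same moment cannot both pass.

```python
import time
from typing import Callable, Optional

DEDUP_TTL_SECONDS = 2 * 60 * 60  # the 2-hour TTL described above


class FakeRedis:
    """In-memory stand-in for the few Redis operations used here (hypothetical helper)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self.clock = clock
        self.data: dict[str, tuple[str, Optional[float]]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        item = self.data.get(key)
        if item is None or (item[1] is not None and item[1] <= self.clock()):
            return None  # missing or expired
        return item[0]

    def set(self, key: str, value: str) -> None:
        self.data[key] = (value, None)  # no expiry, like a plain SET

    def set_nx_ex(self, key: str, value: str, ttl: float) -> bool:
        """Mimics SET key value NX EX ttl: store only if absent; True if stored."""
        if self.get(key) is not None:
            return False
        self.data[key] = (value, self.clock() + ttl)
        return True


def uses_v2(store: FakeRedis, product: str, account: str, event_type: str) -> bool:
    """Challenge #4: per-account, per-event switch; a missing key means the V1-Flow."""
    return store.get(f"{product}#{account}#{event_type}") == "true"


def should_process(store: FakeRedis, product: str, account: str, event_type: str,
                   msg_id: str, from_v2: bool) -> bool:
    # Drop copies that arrive on the flow not selected for this account and event type.
    if from_v2 != uses_v2(store, product, account, event_type):
        return False
    # Challenge #1: claim the msg_id in one call; any later copy finds it already claimed.
    return store.set_nx_ex(f"dedup#{msg_id}", "1", DEDUP_TTL_SECONDS)


now = [0.0]  # fake clock so the TTL can be demonstrated without waiting
store = FakeRedis(clock=lambda: now[0])
store.set("freshdesk#123456#ticket_update", "true")

# Both copies of one ticket_update action arrive; only the V2 copy runs the app.
print(should_process(store, "freshdesk", "123456", "ticket_update", "123abcde4", from_v2=True))   # True
print(should_process(store, "freshdesk", "123456", "ticket_update", "123abcde4", from_v2=False))  # False

# ticket_create has no key in the store, so it stays on the V1-Flow.
print(should_process(store, "freshdesk", "123456", "ticket_create", "55aa", from_v2=True))   # False
print(should_process(store, "freshdesk", "123456", "ticket_create", "55aa", from_v2=False))  # True

# After the 2-hour TTL, the msg_id key expires and could be claimed again.
now[0] += DEDUP_TTL_SECONDS
print(store.set_nx_ex("dedup#123abcde4", "1", DEDUP_TTL_SECONDS))  # True
```

A caveat about this particular sketch: if the two copies of a message straddle a switch flip (the V2 copy arrives while the flag is still false and the V1 copy arrives after it turns true), both copies are skipped, which is one more reason to flip switches in small, monitored batches.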

The Execution

The execution was no simple feat and was divided into four major phases:

- Phase one: Coding, Review, and Testing

It almost seems redundant to mention that relatively mundane activities were part of this effort: code changes, reviews by senior engineers, and functional evaluation by SDETs (Software Development Engineers in Test).

During this period, we emphasized automating the laborious task of verifying that both flows generated the same payload for the serverless app. We did this by writing a test Freshdesk app that validated the app payload against a static JSON schema (generated from existing event payloads). As part of the automation, the Redis configuration switch (see Point 2 in the preceding section) was used to move the test Freshdesk account between the V1 and V2 Flows throughout the test cycles, which also tested the switch itself.

- Phase two: Preparing the Infrastructure

This included the following steps:

- Populating the configuration of the accounts in Redis: We procured a list of paid accounts from the Freshdesk team, generated the configuration keys for all the event types, and set a default value of False for these keys in Redis (using a NodeJS script).

- Scaling the infrastructure: Freshdesk was, and remains, our largest event producer, contributing more than 60% of our event throughput. Adding the new V2-Flow topics effectively doubled our load. We anticipated this and scaled our application infrastructure by the same factor, leaving some room for load spikes.

- Adding the load: Adding the V2-Flow load abruptly might lead to unforeseen consequences. We had to introduce three new topics to our consumers as part of the migration. We went slowly and added the topics one by one, with ample monitoring time between each topic addition.

- Deploying the code: The code changes to process the V1 and V2 Flows simultaneously were deployed after the three preceding steps were done. The Redis configuration switches were all set to False, so the paid accounts were directed to the V1-Flow as soon as the code changes were deployed. Accounts for which keys did not exist in Redis defaulted to the V1-Flow.

- Phase three: Migration

We performed the migration by changing the values of the Redis configuration switches in batches using a NodeJS script. Batches were initially small (one or two accounts), but later, as our confidence grew, we expanded each batch to cover all the accounts on a given subscription plan. We also planned to migrate one data center at a time, except for the customer mentioned in Challenge #5, who needed to be flipped in our busiest data center ahead of an impending IPL workload. We continuously monitored our services and downstream apps for errors and kept an eye out for customer complaints. Fortunately, the migration was completed without a hitch, which gave us enough confidence to move the entire stream to V2 and retire V1.

- Phase four: Clean-up

After all accounts were migrated, we had to perform the clean-up. This phase primarily involved removing the V1 topics and changing the consumer logic to stop relying on the Redis configuration switch and use only the V2 event payloads. After this was done, our consumers’ load decreased significantly, and we could scale the application infrastructure back to usual numbers.
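The seeding step from phase two and the batched flips from phase three can be sketched as follows. The original scripts were written in NodeJS; this Python version is only an illustration, with a plain dict standing in for Redis, hypothetical account IDs, and "true"/"false" strings assumed as the stored booleans. The same `set_flow` helper (a hypothetical name) also serves as the per-account rollback path described in Challenge #4.

```python
from typing import Iterable, Iterator, Sequence

# The five event types from Challenge #4.
EVENT_TYPES = ("ticket_create", "ticket_update", "note_create", "contact_create", "contact_update")


def config_key(account: str, event_type: str, product: str = "freshdesk") -> str:
    """Switch key in the product#account#event_type format shown earlier."""
    return f"{product}#{account}#{event_type}"


def seed(store: dict[str, str], accounts: Iterable[str]) -> None:
    """Phase two: create every switch pointing at the V1-Flow, never overwriting one."""
    for account in accounts:
        for event_type in EVENT_TYPES:
            store.setdefault(config_key(account, event_type), "false")


def batches(items: list[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def set_flow(store: dict[str, str], accounts: Iterable[str], use_v2: bool,
             event_types: Sequence[str] = EVENT_TYPES) -> None:
    """Phase three: flip a batch to the V2-Flow, or roll it back to the V1-Flow."""
    value = "true" if use_v2 else "false"
    for account in accounts:
        for event_type in event_types:
            store[config_key(account, event_type)] = value


store: dict[str, str] = {}
accounts = ["1001", "1002", "1003", "1004", "1005"]  # hypothetical account IDs
seed(store, accounts)
print(len(store))  # 25: five switches per account

plan = list(batches(accounts, 2))
print(plan)  # [['1001', '1002'], ['1003', '1004'], ['1005']]

set_flow(store, plan[0], use_v2=True)  # first small batch; monitor before the next one
set_flow(store, ["1002"], use_v2=False, event_types=["note_create"])  # targeted rollback
print(store["freshdesk#1002#ticket_create"], store["freshdesk#1002#note_create"])  # true false
```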

The Lessons

As a platform, we came to understand the value of a few things, which we now apply in our day-to-day work:

- Scalability should always be addressed when designing a Minimum Viable Product: In our earlier days as a startup, we naturally tended to defer scalability whenever we gave the go-ahead for an MVP. The V1-Flow is an example of a solution where we simply had no idea how far it would scale, since we had no metrics on it. In today’s rapidly growing Freshworks, however, such choices can quickly become bottlenecks, and the V2 migration shows that they can later demand painful and elaborate migration projects.

- A feature management tool is an excellent investment: It would have made rolling out such changes much easier, since such tools support various roll-out strategies that work at scale. What we built using Redis above amounted to a lightweight feature management service.

- Collaboration and consistency are crucial elements: This entire change would not have been possible without the effort and expertise of both the Freshdesk product and platform engineering teams. Only through consistent collaboration and communication, spanning many months, were we able to pull off such a significant change without our customers even noticing.

- Defensive programming: The V2-Flow was a data source the platform was encountering for the first time, as a replacement for an existing one, the V1-Flow. Adopting the principles of defensive programming, such as guarding the code against unexpected inputs and ensuring it was well tested and predictable in its output, was key to the success of this project.

Conclusion

We completed this migration in September 2020. We have not noticed any problems caused by the migration, nor heard about any from our customers. In fact, we now process close to a couple of billion serverless app invocations every month. In a way, we can celebrate the migration as a great success of engineering and human effort, and we are simply reaping the benefits now. We eagerly look forward to solving our next scale challenge and sharing our lessons here soon! As we continue integrating Freshworks’ leadership competencies, this example reflects how we have focused on innovation, driving results, staying customer-focused, and being collaborative.

Contributing author: Prithvijit Dasgupta was a Senior Software Engineer at Freshworks. You can reach out to him on LinkedIn.